Driving SMARTS 2023.3

Objective

The objective is to develop a single-ego policy capable of controlling a single ego to perform a vehicle-following task in the platoon-v0 environment. Refer to platoon_env() for environment details.

Important

In a scenario with multiple egos, a single-ego policy is replicated into every agent. Each agent is stepped independently by calling its respective act function. In short, multiple policies are executed in a distributed manner. The single-ego policy should be capable of accounting for and interacting with other ego vehicles, if any are present.
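The replication described above can be pictured with a minimal sketch. The `ConstantPolicy` class, the helper functions, and the agent IDs are hypothetical stand-ins and not part of SMARTS; the sketch only shows that each ego holds its own policy copy and that each copy's act function sees that ego's observation alone.

```python
from typing import Any, Callable, Dict, Iterable


class ConstantPolicy:
    """Hypothetical stand-in for a user's single-ego policy."""

    def __init__(self, action: Any):
        self._action = action

    def act(self, obs: Dict[str, Any]) -> Any:
        # A real policy would compute its action from `obs`.
        return self._action


def build_agents(agent_ids: Iterable[str], make_policy: Callable[[], Any]) -> Dict[str, Any]:
    """Create one independent policy copy per ego agent."""
    return {agent_id: make_policy() for agent_id in agent_ids}


def step_agents(agents: Dict[str, Any], observations: Dict[str, Any]) -> Dict[str, Any]:
    """Call each agent's own act() on that agent's observation only."""
    return {agent_id: agents[agent_id].act(obs) for agent_id, obs in observations.items()}


# Two egos share the same policy class but not the same policy instance.
agents = build_agents(["Agent_0", "Agent_1"], lambda: ConstantPolicy((0.5, 0.0, 0.0)))
observations = {"Agent_0": {"ego": 0}, "Agent_1": {"ego": 1}}
actions = step_agents(agents, observations)
```

Because no state is shared between the copies, any coordination between egos has to come from what each ego observes about the others.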

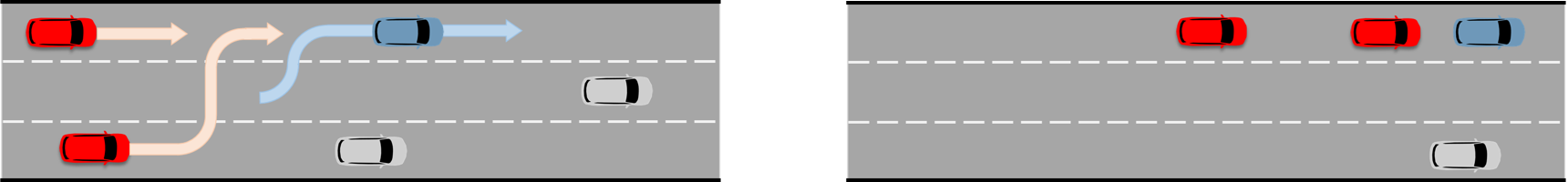

All ego agents should track and follow the leader (i.e., lead vehicle) in a single-file fashion. The lead vehicle is marked as a vehicle of interest and may be found by filtering the interest attribute of the neighborhood vehicles in the observation.

Here, egos are in red colour, the lead vehicle is in blue colour, and background traffic is in silver colour. (Left) At the start of the episode, egos start tracking the lead vehicle. (Right) After a while, egos follow the lead vehicle in a single-file fashion.
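Filtering for the lead vehicle might look like the sketch below. It assumes the neighborhood vehicle data is a dictionary of parallel sequences with an `interest` flag per vehicle; the key names, the layout, and the sample values are assumptions made for illustration and should be checked against the actual formatted observation.

```python
from typing import Any, Dict, List, Optional


def find_leader_index(neighbors: Dict[str, List[Any]]) -> Optional[int]:
    """Return the index of the first neighbor flagged as a vehicle of interest."""
    for index, flag in enumerate(neighbors["interest"]):
        if flag:
            return index
    return None  # No vehicle of interest is currently visible.


# Hypothetical observation fragment; key names and layout are assumptions.
neighbors = {
    "interest": [0, 0, 1, 0],
    "position": [[5.0, 1.0, 0.0], [9.0, -2.0, 0.0], [20.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
}
leader_index = find_leader_index(neighbors)
leader_position = None if leader_index is None else neighbors["position"][leader_index]
```

Returning `None` when no flag is set lets the policy handle steps where the leader is outside the observed neighborhood.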

The episode ends when the leader reaches its destination. Ego agents do not have prior knowledge of the leader’s destination. Additionally, an ego terminates whenever it collides, drives off road, or exceeds the maximum number of steps per episode.

Any method, such as reinforcement learning, offline reinforcement learning, behavior cloning, generative models, predictive models, etc., may be used to develop the policy.

Training scenarios

Several scenarios are provided for training as follows. The corresponding GIF image shows the task execution by a trained baseline agent.

Observation space

The underlying environment returns a formatted Observation, using the multi_agent option, as the observation at each time point. See ObservationSpacesFormatter for a sample formatted observation data structure.

Action space

The action space for an ego can be either Continuous or RelativeTargetPose. The user should choose one of the action spaces and specify the chosen action space through the ego’s agent interface.
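As a rough illustration of what a policy might emit under the Continuous action space, the sketch below assumes the action is a (throttle, brake, steering) tuple with throttle and brake in [0, 1] and steering in [-1, 1]. This page does not state those ranges, so treat them as assumptions to verify against the SMARTS action space documentation; the helper names are hypothetical.

```python
from typing import Tuple


def clip(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def make_continuous_action(throttle: float, brake: float, steering: float) -> Tuple[float, float, float]:
    """Build a (throttle, brake, steering) tuple within the assumed ranges."""
    # Assumed ranges: throttle [0, 1], brake [0, 1], steering [-1, 1].
    return (clip(throttle, 0.0, 1.0), clip(brake, 0.0, 1.0), clip(steering, -1.0, 1.0))


# Out-of-range raw outputs are clipped before being returned by the policy.
action = make_continuous_action(1.4, -0.2, 0.3)
```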

Code structure

Users are free to use any training method and any folder structure for training the policy.

Only the inference code is required for evaluation; it must therefore follow the folder structure and contain the specified file contents, as explained below. The files and folders listed below must be present with identical names. The user may optionally add any additional files.

    inference
    ├── contrib_policy
    │   ├── __init__.py
    │   ├── policy.py
    │   .
    │   .
    │   .
    ├── __init__.py
    ├── MANIFEST.in
    ├── setup.cfg
    └── setup.py

inference/contrib_policy/__init__.py

Keep this file unchanged. It is an empty file.

inference/contrib_policy/policy.py

Must contain a Policy(Agent) class, which inherits from Agent.
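A minimal sketch of such a class is shown here. The import path `smarts.core.agent.Agent`, the fallback stub (included only so the sketch runs without SMARTS installed), and the `default_action` constructor argument are assumptions for illustration; the placeholder act logic is not a working driving policy.

```python
try:
    from smarts.core.agent import Agent  # Assumed import path for the base class.
except ImportError:
    # Fallback stub so this sketch can run where SMARTS is not installed.
    class Agent:
        def act(self, obs, **configs):
            raise NotImplementedError


class Policy(Agent):
    """Sketch of a single-ego policy; `default_action` is a hypothetical parameter."""

    def __init__(self, default_action=(0.0, 0.0, 0.0)):
        self._default_action = default_action

    def act(self, obs):
        # Placeholder logic: a real policy would map `obs` to an action here,
        # for example by running a trained model loaded in __init__.
        return self._default_action


policy = Policy(default_action=(0.3, 0.0, 0.0))
action = policy.act({})
```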

inference/__init__.py

Must contain the following template code. The template code registers the user’s policy in the SMARTS agent zoo.

    from contrib_policy.policy import Policy

    from smarts.core.agent_interface import AgentInterface
    from smarts.core.controllers import ActionSpaceType
    from smarts.zoo.agent_spec import AgentSpec
    from smarts.zoo.registry import register


    def entry_point(**kwargs):
        interface = AgentInterface(
            action=ActionSpaceType.<...>,
            drivable_area_grid_map=<...>,
            lane_positions=<...>,
            lidar_point_cloud=<...>,
            occupancy_grid_map=<...>,
            road_waypoints=<...>,
            signals=<...>,
            top_down_rgb=<...>,
        )

        agent_params = {
            "<...>": <...>,
            "<...>": <...>,
        }

        return AgentSpec(
            interface=interface,
            agent_builder=Policy,
            agent_params=agent_params,
        )


    register("contrib-agent-v0", entry_point=entry_point)

The user may fill in the <...> spaces in the template.

The user may specify the ego’s interface by configuring any field of AgentInterface, except

inference/MANIFEST.in

Contains any file paths to be included in the package.

inference/setup.cfg

Must contain the following template code. The template code helps build the user policy into a Python package.

    [metadata]
    name = <...>
    version = 0.1.0
    url = https://github.com/huawei-noah/SMARTS
    description = SMARTS zoo agent.
    long_description = <...>. See [SMARTS](https://github.com/huawei-noah/SMARTS).
    long_description_content_type=text/markdown
    classifiers=
        Programming Language :: Python
        Programming Language :: Python :: 3 :: Only
        Programming Language :: Python :: 3.8

    [options]
    packages = find:
    include_package_data = True
    zip_safe = True
    python_requires = == 3.8.*
    install_requires =
        <...>==<...>
        <...>==<...>

The user may fill in the <...> spaces in the template.

The user should provide a name for their policy and describe it in the name and long_description sections, respectively.

Do not add the SMARTS package as a dependency in the install_requires section.

Dependencies in the install_requires section must have an exact package version specified using ==.
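The two install_requires rules above can be checked before packaging with a small script. The sketch below is not part of SMARTS; the function name, the package names, and the version numbers in the sample are hypothetical. It reads the [options] section with Python's standard configparser and reports entries that are not pinned with == or that name SMARTS.

```python
import configparser
from typing import List


def check_install_requires(cfg_text: str) -> List[str]:
    """Return a list of problems found in the install_requires entries."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    raw = parser.get("options", "install_requires", fallback="")
    requirements = [line.strip() for line in raw.splitlines() if line.strip()]

    problems = []
    for requirement in requirements:
        name, separator, version = requirement.partition("==")
        # An entry counts as pinned only if it has the form <name>==<version>.
        if not separator or not version.strip() or any(ch in name for ch in "<>~!"):
            problems.append(f"{requirement}: not pinned with ==")
        # Strip any extras such as smarts[sumo] before comparing the name.
        if name.strip().lower().split("[")[0] == "smarts":
            problems.append(f"{requirement}: SMARTS must not be a dependency")
    return problems


# Hypothetical sample with one valid entry and two rule violations.
sample_cfg = """
[options]
install_requires =
    numpy==1.24.2
    torch>=2.0
    smarts==1.4.0
"""
problems = check_install_requires(sample_cfg)
```

An empty list means both rules hold for every entry; the check is deliberately simple and does not validate that the pinned versions exist.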

inference/setup.py

Keep this file and its default contents unchanged. Its default contents are shown below.

    from setuptools import setup

    if __name__ == "__main__":
        setup()

Example

Example training and inference code is provided for this benchmark. See the e11_platoon example. The example uses the PPO algorithm from the Stable Baselines3 reinforcement learning library. It uses the Continuous action space.

Instructions for training and evaluating the example are as follows.

Train

Setup

    # In terminal-A
    $ cd <path>/SMARTS/examples/e11_platoon
    $ python3.8 -m venv ./.venv
    $ source ./.venv/bin/activate
    $ pip install --upgrade pip
    $ pip install wheel==0.38.4
    $ pip install -e ./../../.[camera-obs,argoverse,envision,sumo]
    $ pip install -e ./inference/

Train locally without visualization

    # In terminal-A
    $ python3.8 train/run.py

Train locally with visualization

    # In a different terminal-B
    $ cd <path>/SMARTS/examples/e11_platoon
    $ source ./.venv/bin/activate
    $ scl envision start
    # Open http://localhost:8081/

    # In terminal-A
    $ python3.8 train/run.py --head

Trained models are saved by default inside the <path>/SMARTS/examples/e11_platoon/train/logs/ folder.
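Evaluation later expects one chosen model at inference/contrib_policy/saved_model.zip. A helper along the following lines could stage a model from the logs folder. It is a sketch under two assumptions: that saved models are .zip files somewhere under the logs folder, and that the most recently modified one is the desired one. The function name is hypothetical.

```python
import shutil
from pathlib import Path
from typing import Optional


def stage_latest_model(logs_dir: Path, destination: Path) -> Optional[Path]:
    """Copy the most recently modified .zip under logs_dir to destination.

    Returns the path of the copied source file, or None if no .zip was found.
    """
    candidates = sorted(logs_dir.rglob("*.zip"), key=lambda path: path.stat().st_mtime)
    if not candidates:
        return None
    destination.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(candidates[-1], destination)
    return candidates[-1]
```

It would be called with the logs folder as `logs_dir` and the saved_model.zip path under inference/contrib_policy as `destination`. Copying rather than moving keeps the original training logs intact.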

Docker

Train inside docker

    $ cd <path>/SMARTS
    $ docker build --file=./examples/e11_platoon/train/Dockerfile --network=host --tag=platoon .
    $ docker run --rm -it --network=host --gpus=all platoon
    (container) $ cd /SMARTS/examples/e11_platoon
    (container) $ python3.8 train/run.py

Evaluate

Choose a desired saved model from the previous training step, rename it as saved_model.zip, and move it to <path>/SMARTS/examples/e11_platoon/inference/contrib_policy/saved_model.zip.

Evaluate locally

    $ cd <path>/SMARTS
    $ python3.8 -m venv ./.venv
    $ source ./.venv/bin/activate
    $ pip install --upgrade pip
    $ pip install wheel==0.38.4
    $ pip install -e .[camera-obs,argoverse,envision,sumo]
    $ scl zoo install examples/e11_platoon/inference
    $ scl benchmark run driving_smarts_2023_3 examples.e11_platoon.inference:contrib-agent-v0 --auto-install

Zoo agents

A compatible zoo agent can be evaluated in this benchmark as follows.

    $ cd <path>/SMARTS
    $ scl zoo install <agent path>
    $ scl benchmark run driving_smarts_2023_3==0.0 <agent_locator> --auto-install